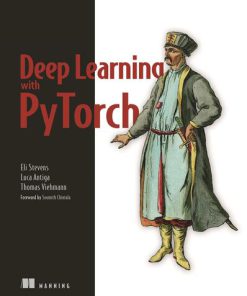

Deep Learning with PyTorch Build Train and Tune Neural Networks Using Python Tools 1st Edition by Eli Stevens, Luca Antiga, Thomas Viehmann ISBN 1617295264 9781617295263

Original price: $50.00. Current price: $25.00.

Deep Learning with PyTorch Build, Train, and Tune Neural Networks Using Python Tools 1st Edition by Eli Stevens, Luca Antiga, Thomas Viehmann – Ebook PDF Instant Download/Delivery: 1617295264, 978-1617295263

Full download Deep Learning with PyTorch Build, Train, and Tune Neural Networks Using Python Tools 1st Edition after payment

Product details:

ISBN 10: 1617295264

ISBN 13: 978-1617295263

Author: Eli Stevens, Luca Antiga, Thomas Viehmann

Every other day we hear about new ways to put deep learning to good use: improved medical imaging, accurate credit card fraud detection, long-range weather forecasting, and more. PyTorch puts these superpowers in your hands, providing a comfortable Python experience that gets you started quickly and then grows with you as you, and your deep learning skills, become more sophisticated.

Deep Learning with PyTorch teaches you how to implement deep learning algorithms with Python and PyTorch. This book takes you into a fascinating case study: building an algorithm capable of detecting malignant lung tumors using CT scans. As the authors guide you through this real example, you’ll discover just how effective and fun PyTorch can be.

Key features

• Using the PyTorch tensor API

• Understanding automatic differentiation in PyTorch

• Training deep neural networks

• Monitoring training and visualizing results

• Interoperability with NumPy

Audience

Written for developers with some knowledge of Python as well as basic linear algebra skills. Some understanding of deep learning will be helpful; however, no experience with PyTorch or other deep learning frameworks is required.

About the technology

PyTorch is a machine learning framework with a strong focus on deep neural networks. Because it emphasizes GPU-based acceleration, PyTorch performs exceptionally well on readily available hardware and scales easily to larger systems.

Eli Stevens has worked in Silicon Valley for the past 15 years as a software engineer, and the past 7 years as Chief Technical Officer of a startup making medical device software. Luca Antiga is co-founder and CEO of an AI engineering company located in Bergamo, Italy, and a regular contributor to PyTorch.

Deep Learning with PyTorch Build, Train, and Tune Neural Networks Using Python Tools 1st Edition Table of contents:

Part 1: Core PyTorch

1. Introducing Deep Learning and the PyTorch Library

- 1.1 The Deep Learning Revolution

- 1.2 PyTorch for Deep Learning

- 1.3 Why PyTorch?

- 1.3.1 The Deep Learning Competitive Landscape

- 1.4 An Overview of How PyTorch Supports Deep Learning Projects

- 1.5 Hardware and Software Requirements

- 1.5.1 Using Jupyter Notebooks

- 1.6 Exercises

- 1.7 Summary

2. Pretrained Networks

- 2.1 A Pretrained Network for Image Recognition

- 2.1.1 Obtaining a Pretrained Network

- 2.1.2 AlexNet

- 2.1.3 ResNet

- 2.1.4 Ready, Set, Almost Run

- 2.1.5 Run!

- 2.2 A Pretrained Model that Fakes It Until It Makes It

- 2.2.1 The GAN Game

- 2.2.2 CycleGAN

- 2.2.3 Turning Horses into Zebras

- 2.3 A Pretrained Network that Describes Scenes

- 2.3.1 NeuralTalk2

- 2.4 Torch Hub

- 2.5 Conclusion

- 2.6 Exercises

- 2.7 Summary

3. It Starts with a Tensor

- 3.1 The World as Floating-Point Numbers

- 3.2 Tensors: Multidimensional Arrays

- 3.2.1 From Python Lists to PyTorch Tensors

- 3.2.2 Constructing Our First Tensors

- 3.2.3 The Essence of Tensors

- 3.3 Indexing Tensors

- 3.4 Named Tensors

- 3.5 Tensor Element Types

- 3.5.1 Specifying the Numeric Type with dtype

- 3.5.2 A dtype for Every Occasion

- 3.5.3 Managing a Tensor’s dtype Attribute

- 3.6 The Tensor API

- 3.7 Tensors: Scenic Views of Storage

- 3.7.1 Indexing into Storage

- 3.7.2 Modifying Stored Values: In-Place Operations

- 3.8 Tensor Metadata: Size, Offset, and Stride

- 3.8.1 Views of Another Tensor’s Storage

- 3.8.2 Transposing without Copying

- 3.8.3 Transposing in Higher Dimensions

- 3.8.4 Contiguous Tensors

- 3.9 Moving Tensors to the GPU

- 3.9.1 Managing a Tensor’s Device Attribute

- 3.10 NumPy Interoperability

- 3.11 Generalized Tensors Are Tensors, Too

- 3.12 Serializing Tensors

- 3.12.1 Serializing to HDF5 with h5py

- 3.13 Conclusion

- 3.14 Exercises

- 3.15 Summary

4. Real-World Data Representation Using Tensors

- 4.1 Working with Images

- 4.1.1 Adding Color Channels

- 4.1.2 Loading an Image File

- 4.1.3 Changing the Layout

- 4.1.4 Normalizing the Data

- 4.2 3D Images: Volumetric Data

- 4.2.1 Loading a Specialized Format

- 4.3 Representing Tabular Data

- 4.3.1 Using a Real-World Dataset

- 4.3.2 Loading a Wine Data Tensor

- 4.3.3 Representing Scores

- 4.3.4 One-Hot Encoding

- 4.3.5 When to Categorize

- 4.3.6 Finding Thresholds

- 4.4 Working with Time Series

- 4.4.1 Adding a Time Dimension

- 4.4.2 Shaping the Data by Time Period

- 4.4.3 Ready for Training

- 4.5 Representing Text

- 4.5.1 Converting Text to Numbers

- 4.5.2 One-Hot Encoding Characters

- 4.5.3 One-Hot Encoding Whole Words

- 4.5.4 Text Embeddings

- 4.5.5 Text Embeddings as a Blueprint

- 4.6 Conclusion

- 4.7 Exercises

- 4.8 Summary

5. The Mechanics of Learning

- 5.1 A Timeless Lesson in Modeling

- 5.2 Learning is Just Parameter Estimation

- 5.2.1 A Hot Problem

- 5.2.2 Gathering Some Data

- 5.2.3 Visualizing the Data

- 5.2.4 Choosing a Linear Model

- 5.3 Less Loss is What We Want

- 5.3.1 From Problem Back to PyTorch

- 5.4 Down Along the Gradient

- 5.4.1 Decreasing Loss

- 5.4.2 Getting Analytical

- 5.4.3 Iterating to Fit the Model

- 5.4.4 Normalizing Inputs

- 5.4.5 Visualizing (Again)

- 5.5 PyTorch’s autograd: Backpropagating All Things

- 5.5.1 Computing the Gradient Automatically

- 5.5.2 Optimizers à la Carte

- 5.5.3 Training, Validation, and Overfitting

- 5.5.4 Autograd Nits and Switching It Off

- 5.6 Conclusion

- 5.7 Exercise

- 5.8 Summary

6. Using a Neural Network to Fit the Data

- 6.1 Artificial Neurons

- 6.1.1 Composing a Multilayer Network

- 6.1.2 Understanding the Error Function

- 6.1.3 All We Need is Activation

- 6.1.4 More Activation Functions

- 6.1.5 Choosing the Best Activation Function

- 6.1.6 What Learning Means for a Neural Network

- 6.2 The PyTorch nn Module

- 6.2.1 Using __call__ Rather than forward

- 6.2.2 Returning to the Linear Model

- 6.3 Finally, a Neural Network

- 6.3.1 Replacing the Linear Model

- 6.3.2 Inspecting the Parameters

- 6.3.3 Comparing to the Linear Model

- 6.4 Conclusion

- 6.5 Exercises

- 6.6 Summary

7. Telling Birds from Airplanes: Learning from Images

- 7.1 A Dataset of Tiny Images

- 7.1.1 Downloading CIFAR-10

- 7.1.2 The Dataset Class

- 7.1.3 Dataset Transforms

- 7.1.4 Normalizing Data

- 7.2 Distinguishing Birds from Airplanes

- 7.2.1 Building the Dataset

- 7.2.2 A Fully Connected Model

- 7.2.3 Output of a Classifier

- 7.2.4 Representing the Output as Probabilities

- 7.2.5 A Loss for Classifying

- 7.2.6 Training the Classifier

- 7.2.7 The Limits of Going Fully Connected

- 7.3 Conclusion

- 7.4 Exercises

- 7.5 Summary

8. Using Convolutions to Generalize

- 8.1 The Case for Convolutions

- 8.1.1 What Convolutions Do

- 8.2 Convolutions in Action

- 8.2.1 Padding the Boundary

- 8.2.2 Detecting Features with Convolutions

- 8.2.3 Looking Further with Depth and Pooling

- 8.2.4 Putting It All Together for Our Network

- 8.3 Subclassing nn.Module for a Convolutional Network

- 8.4 Training the Convolutional Model

- 8.5 A Summary of What You Learned

- 8.6 Exercises

- 8.7 Summary

9. The Power of Transfer Learning

- 9.1 What Is Transfer Learning?

- 9.2 Transfer Learning in PyTorch

- 9.2.1 Fine-Tuning a Pretrained Model

- 9.2.2 Using a Pretrained Model for Feature Extraction

- 9.3 Conclusion

- 9.4 Exercises

- 9.5 Summary

People also search for Deep Learning with PyTorch Build, Train, and Tune Neural Networks Using Python Tools 1st:

introduction to deep learning with pytorch datacamp github

deep learning with pytorch a 60 minute blitz

learn deep learning with pytorch

machine learning with pytorch and scikit

applied deep learning with pytorch

Tags:

Eli Stevens,Luca Antiga,Thomas Viehmann,Deep,Learning,PyTorch,Build,Train,Tune Neural,Networks,Python Tools 1st
